In case you are wondering about the blue whale up there, it’s the logo of Docker signifying containers being delivered. Before we go onto all the techie stuff, let’s start with a short and sweet history lesson.

Evolution towards Docker

Briefcase computers

Once upon a time we had desktop machines, and everything resided on that one dreaded machine. With laptops, we were happy that we could pick up that otherwise big suitcase and take it wherever we wanted. To duplicate our environment, though, we had all sorts of shortcuts, but all of them only sped up an installation process where EVERYTHING had to be installed on a clean slate.

VMs

Then some clever chaps found a way to ‘virtualize’ the whole thing and called the result ‘virtual machines’, VMs for short. Now, on top of our main OS, we could install a whole new ‘environment’ with its own guest OS. The interesting part was saving and transporting that VM. We could save these environments, copy them, share them, maintain histories of them, and life was merry.

VMs in the cloud

Then the cloud came along, and another group of smarty pants had the idea of hosting these VMs as a cloud service. That meant that instead of maintaining a machine with everything I want, I can just ‘rent’ an environment for as long as I want and then give it back. That made creating environments, and especially sharing them, a lot easier and, in some cases, very cost effective.

Docker comes along

Then some folks who probably loved whales (or it was just the designer) came up with another great concept. Instead of creating VMs, each with its own OS, and moving large files around, they could run the desired application directly on the host OS, but in “isolation”. That meant our running app does not see the rest of the host, nor does anything else on the host affect our app, which was one of the main reasons for using VMs. Interestingly, all of this was done with features that already existed in the Linux kernel, such as namespaces and control groups.

While almost all of the aforementioned concepts are still in fashion these days in one shape or form, the point here is to shed some light on how things evolved.

About Docker

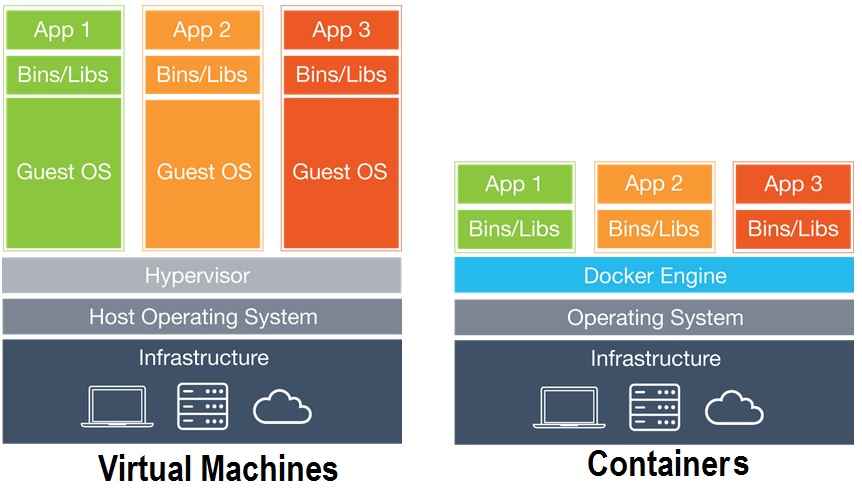

Essentially, there are a few fundamental concepts behind Docker. The first is how Docker compares with VMs in the way it functions. The commonly cited diagram above illustrates it well.

In summary, Docker environments, known as containers, use the host operating system to run applications instead of creating a new guest operating system. At the same time, all three apps in the image run in isolation from each other and from the host. This seemingly minor detail has made a big difference.

Running Docker

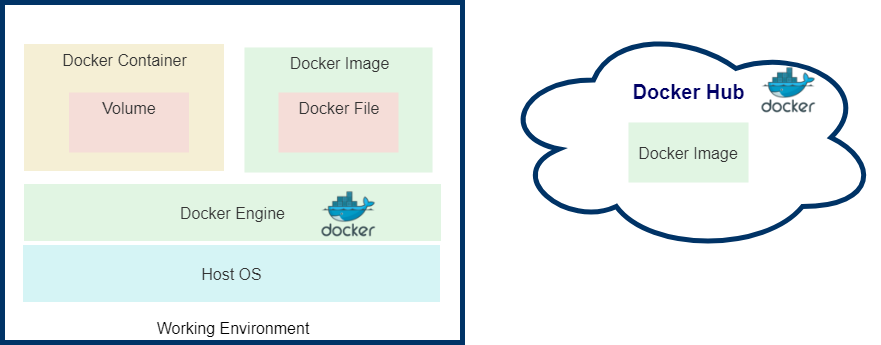

Walking through the basic steps of how a container is created gives a better idea of what Docker does.

Docker Engine

As the image above shows, the Docker engine runs on our work machine’s OS like any other software or VM player. The Docker engine does all the magic of connecting our app with the required OS resources and bridging communication between the container and the host.

Docker Image

The analogy of a class and an object fits well here. A Docker image is like a class in object-oriented programming, or like a template. The image itself contains the required programs, which can run on any host where the Docker engine is available (since the engine sits in between and provides the connectivity).

The image is used to create the actual containers that run. In our analogy, that is like instantiating an object from a class.

Docker Hub

An image can be stored on your local machine or network, or, more commonly, on Docker Hub (https://hub.docker.com/). Most common software is published there by its vendors. From your machine’s command line, you can download an image directly from Docker Hub and create a container from it.
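As a rough sketch of that command-line flow, the snippet below only assembles the `docker pull` and `docker run` commands and prints them, so it is safe to run on a machine without Docker. The `nginx` image, the container names `web-1` and `web-2`, and the port numbers are assumptions chosen for illustration. Starting two containers from the one image mirrors the class-and-object analogy.

```python
import shlex

IMAGE = "nginx:latest"  # assumed example image; any image on Docker Hub would do


def pull_command(image):
    """Command that downloads an image from Docker Hub."""
    return ["docker", "pull", image]


def run_command(image, name, host_port):
    """Command that starts a detached container from an image.

    -d runs the container in the background, --name labels it, and
    -p maps a host port to port 80 inside the container.
    """
    return ["docker", "run", "-d", "--name", name, "-p", f"{host_port}:80", image]


commands = [
    pull_command(IMAGE),
    run_command(IMAGE, "web-1", 8080),  # first "object" created from the "class"
    run_command(IMAGE, "web-2", 8081),  # second, independent container
]

# Print the commands instead of executing them.
for cmd in commands:
    print(shlex.join(cmd))
```

Pasting the printed lines into a terminal with Docker installed should download the image once and start both containers from it.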

Dockerfile

The configuration of a Docker image is placed in what is called a Dockerfile. To keep it simple, the file lists the steps used to build the image along with its configuration. To create an image, one has to write a Dockerfile specifying those details.
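To give a feel for what such a file contains, here is a minimal sketch that writes a small Dockerfile into a temporary directory and prints it. The base image, the `APP_ENV` variable and the `site/` folder are hypothetical; a real file depends entirely on the application being packaged.

```python
from pathlib import Path
import tempfile

# Hypothetical Dockerfile for illustration: start from a base image and
# layer our own content and settings on top of it.
DOCKERFILE = """\
FROM nginx:latest
ENV APP_ENV=test
COPY site/ /usr/share/nginx/html/
EXPOSE 80
"""


def write_dockerfile(directory):
    """Write the Dockerfile text into the given directory and return its path."""
    path = Path(directory) / "Dockerfile"
    path.write_text(DOCKERFILE)
    return path


with tempfile.TemporaryDirectory() as build_dir:
    dockerfile = write_dockerfile(build_dir)
    written = dockerfile.read_text()
    print(written)
```

Each line is one instruction: `FROM` names the image to start from, `ENV` sets an environment variable, `COPY` adds files from the build folder, and `EXPOSE` documents the port the app listens on.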

Docker Container

Now, with the Docker image (which has its own Dockerfile), we copy the image onto our own machine. To create a container, we can either create one directly from the image, or we can adapt the image to our own needs by writing a Dockerfile based on it, building a new image from that, and then creating a container from the new image.

In terms of our class analogy, we can create an object from the class directly, or create a subclass inherited from the original class, add or edit any attributes we want, and then create an object from the subclass.
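The two routes can be sketched as command sequences. The snippet only prints the commands; the tag `my-team/web:test` and the container names are made up for illustration, and the build step assumes a Dockerfile sits in the current folder.

```python
import shlex

BASE_IMAGE = "nginx:latest"        # the original "class" (assumed example)
CUSTOM_IMAGE = "my-team/web:test"  # hypothetical tag for the "subclass"


def build_command(tag, context_dir="."):
    """Command that builds an image from the Dockerfile found in context_dir."""
    return ["docker", "build", "-t", tag, context_dir]


def run_command(image, name):
    """Command that creates and starts a detached container from an image."""
    return ["docker", "run", "-d", "--name", name, image]


# Route 1: instantiate the original image directly.
direct = [run_command(BASE_IMAGE, "web-plain")]

# Route 2: build a customised image first, then instantiate that one.
customised = [
    build_command(CUSTOM_IMAGE),
    run_command(CUSTOM_IMAGE, "web-custom"),
]

for cmd in direct + customised:
    print(shlex.join(cmd))
```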

Volume

An important and powerful concept in Docker is volumes. Every program running in a Docker container has some data to save. By default, each container keeps its data separately in its own writable storage, and once the container is destroyed, that data goes with it.

Things get interesting from here. Data can instead be kept in ‘volumes’, which persist even after the container is destroyed and can be ‘shared’. This way, the same dataset can be used by many containers.
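A small sketch of that behaviour, again printing the commands rather than running them: one throwaway container writes a file into a named volume, and a second container reads it back after the first one is gone. The volume name, the `alpine` helper image and the file path are assumptions for illustration.

```python
import shlex

VOLUME = "shared-test-data"  # hypothetical volume name
IMAGE = "alpine:latest"      # small assumed image, used only to touch files


def run_with_volume(shell_command):
    """Command for a throwaway container with the volume mounted at /data.

    --rm removes the container on exit; the named volume is left behind.
    """
    return ["docker", "run", "--rm", "-v", f"{VOLUME}:/data",
            IMAGE, "sh", "-c", shell_command]


commands = [
    ["docker", "volume", "create", VOLUME],
    # The first container writes a file and is then removed.
    run_with_volume("echo seeded > /data/state.txt"),
    # A second, unrelated container still sees the same file.
    run_with_volume("cat /data/state.txt"),
    # The volume goes away only when it is removed explicitly.
    ["docker", "volume", "rm", VOLUME],
]

for cmd in commands:
    print(shlex.join(cmd))
```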

Benefits for automation engineers and testers

The benefits of using Docker for testers are great. I’m not going into detail on them, just hinting at a few here.

If your application under test (AUT) already has a micro-service architecture, your team is most probably already using Docker, and it would only make sense for you to do so too.

Creating new environments

Creating new test environments and tearing them down becomes child’s play once things are in place. It’s just a matter of running a simple docker command from the CLI to create a new container from a saved image of your AUT.
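A minimal sketch of that create-and-tear-down pair, with the commands printed rather than executed. The image name `registry.example.com/aut:1.4.2`, the container name and both port numbers are hypothetical placeholders for your own AUT.

```python
import shlex

# Hypothetical saved image of the application under test (AUT).
AUT_IMAGE = "registry.example.com/aut:1.4.2"


def create_environment(name, host_port, container_port=8080):
    """Command that starts a fresh test environment from the saved image."""
    return ["docker", "run", "-d", "--name", name,
            "-p", f"{host_port}:{container_port}", AUT_IMAGE]


def tear_down_environment(name):
    """Command that force-removes the container (-f) and its anonymous volumes (-v)."""
    return ["docker", "rm", "-f", "-v", name]


env_name = "aut-regression"
up = create_environment(env_name, host_port=9000)
down = tear_down_environment(env_name)

print(shlex.join(up))
print(shlex.join(down))
```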

Saving app state

Saving the state of the AUT becomes a lot easier with containers as well. We can save volumes of the AUT with the desired test data and start from the same AUT state every time. For applications where reproducing issues from the field is a pain, this can be a blessing.

Even if your AUT does not have a micro-service architecture, you can still set up steps to run certain portions of your AUT, like the DB, in a Docker container and snapshot your data at the required states. How to implement this varies a lot from case to case.
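One possible way to snapshot such data, sketched under assumptions: a throwaway helper container mounts the volume next to a host folder and archives it with `tar`. The volume name, the host folder `/tmp/aut-snapshots` and the label `after-login` are hypothetical, and the container using the volume would normally be stopped first so the files are consistent. The snippet only prints the commands.

```python
import shlex

VOLUME = "aut-db-data"             # hypothetical volume holding the AUT's DB files
BACKUP_DIR = "/tmp/aut-snapshots"  # hypothetical host folder for the archives
HELPER_IMAGE = "alpine:latest"     # small assumed image that ships with tar


def snapshot_command(label):
    """Archive the volume's contents into BACKUP_DIR/<label>.tar.gz."""
    return ["docker", "run", "--rm",
            "-v", f"{VOLUME}:/data:ro",    # volume mounted read-only
            "-v", f"{BACKUP_DIR}:/backup",
            HELPER_IMAGE,
            "tar", "czf", f"/backup/{label}.tar.gz", "-C", "/data", "."]


def restore_command(label):
    """Unpack a saved archive back into the volume."""
    return ["docker", "run", "--rm",
            "-v", f"{VOLUME}:/data",
            "-v", f"{BACKUP_DIR}:/backup:ro",
            HELPER_IMAGE,
            "tar", "xzf", f"/backup/{label}.tar.gz", "-C", "/data"]


snapshot = snapshot_command("after-login")
restore = restore_command("after-login")

print(shlex.join(snapshot))
print(shlex.join(restore))
```

Restoring into an empty volume before starting the AUT is one way to begin every run from the same known state.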

Multiple execution environments

For automation folks, just as we can create multiple AUT environments, we can create multiple automation execution environments as well. If your automation tool has a Docker image (most open source tools have one), it becomes easy to create multiple execution environments on demand and tear them down once the tests are done.
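As an illustration, the sketch below prints the commands for three parallel execution environments and their clean-up. It assumes the Selenium project's `selenium/standalone-chrome` image, which listens on port 4444 inside the container; the `runner-N` names and the host port range are made up, and any other tool image could be substituted.

```python
import shlex

# Assumed example tool image and the port it listens on inside the container.
TOOL_IMAGE = "selenium/standalone-chrome"
CONTAINER_PORT = 4444


def start_commands(count, first_host_port=4444):
    """One `docker run` per execution environment, each on its own host port."""
    return [
        ["docker", "run", "-d", "--name", f"runner-{i}",
         "-p", f"{first_host_port + i}:{CONTAINER_PORT}", TOOL_IMAGE]
        for i in range(count)
    ]


def stop_commands(count):
    """Matching commands that remove every runner once the tests are done."""
    return [["docker", "rm", "-f", f"runner-{i}"] for i in range(count)]


for cmd in start_commands(3) + stop_commands(3):
    print(shlex.join(cmd))
```

Each runner gets its own host port, so a test suite can be split across them and pointed at ports 4444, 4445 and 4446.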
