Automation Test Data Management

An important factor in automation framework design is managing your test data. Mismanaged test data for a complex application, an ERP for instance, can make life miserable. I often see teams not putting enough focus on this, which makes their automated scripts flaky.

Understand the product

Certain traits are common within application types: ERPs tend to have very complex test data, while SaaS applications providing simple services might have limited test data requirements, and so on. However, it is paramount to understand:

1. Your application and the kind of data the tests might need.

2. Different methods through which the data can be created and removed (front end, web services, SQL scripts).

3. The amount of test data you would need per test. I have automated products needing 5 to 10 fields per test, and others needing 150 to 300 fields per test. A large amount of data would have a VERY AMUSING impact on your tests.

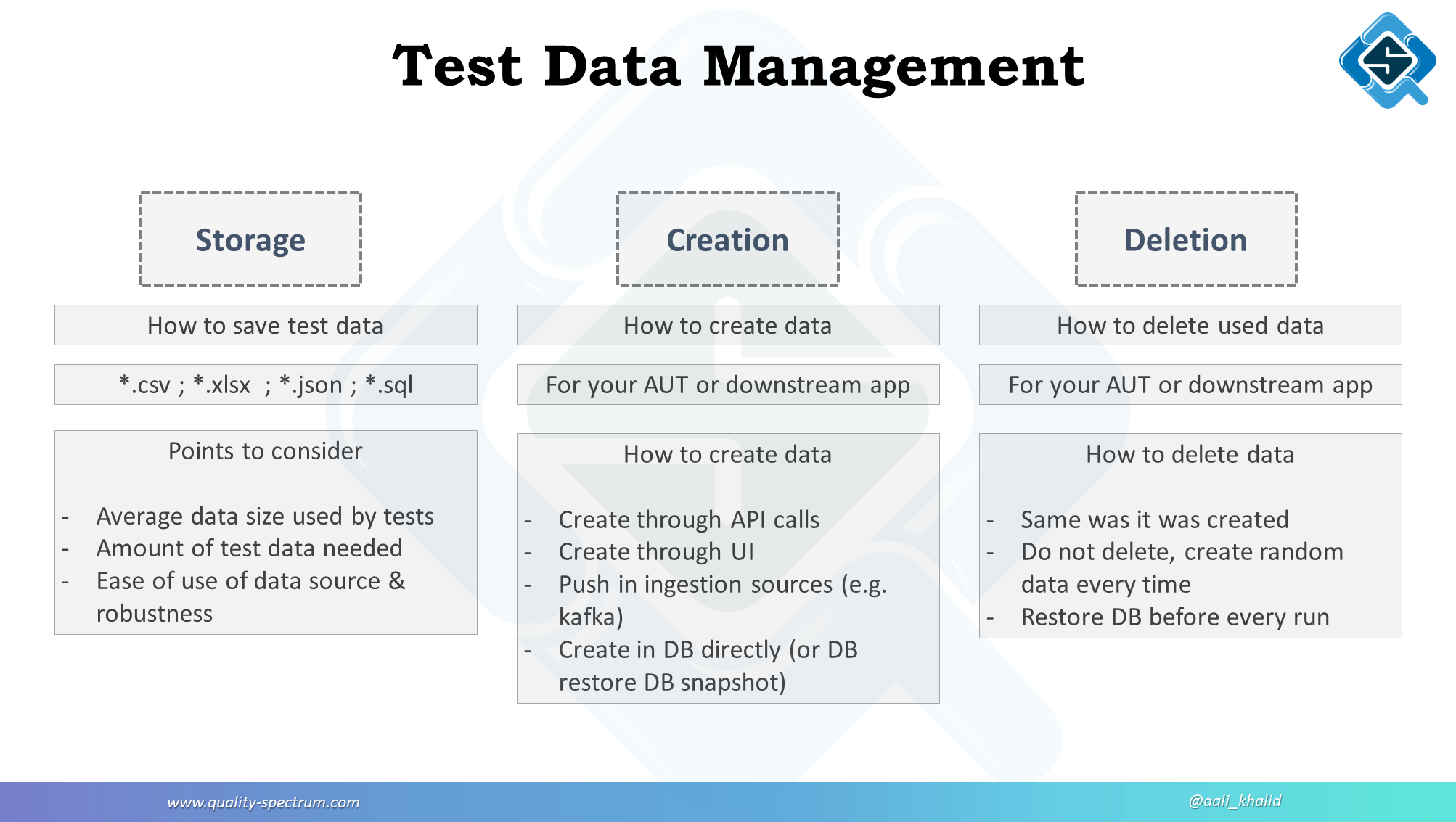

Components of test data management

There are three main things to consider for your test data management strategy. You can use different patterns, each of which uses a combination of these factors.

- Storing test data

- Creating test data

- Cleaning test data

The image below gives a quick summary.

Storing data

Whatever test data your scripts need has to be stored somewhere. Teams usually start by writing the needed data within the scripts, then move it into Excel files. I personally am not a big fan of keeping data in Excel, but if it serves the purpose then fine.
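As one possible alternative to hard-coded values or spreadsheets, test data can live in a plain JSON file keyed by test name. The sketch below is only an illustration: the file name, the test names and the fields are all made up for the example, and the file is written to a temporary folder just so the snippet runs on its own.

```python
import json
import tempfile
from pathlib import Path


def load_test_data(path, test_name):
    """Return the data set stored for one test, keyed by test name."""
    with open(path, encoding="utf-8") as handle:
        all_data = json.load(handle)
    return all_data[test_name]


# Hypothetical data; in a real project this file would sit in the repository
# next to the test scripts instead of being generated here.
sample = {
    "test_create_invoice": {"customer": "ACME Ltd", "amount": 125.50},
    "test_cancel_invoice": {"customer": "ACME Ltd", "invoice_id": "INV-001"},
}

data_file = Path(tempfile.mkdtemp()) / "test_data.json"
data_file.write_text(json.dumps(sample, indent=2), encoding="utf-8")

# A test script only asks for its own slice of the data.
invoice_data = load_test_data(data_file, "test_create_invoice")
print(invoice_data["customer"])
```

Keeping the data in a text format like this makes changes visible in version control, which is harder to get with a binary spreadsheet.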

Creation & deletion strategies

You might want to create test data for the AUT (application under test) or for a downstream application which in turn will be used by your AUT. There are many ways in which data can be created and deleted; here are some common ones.

Through API

Create the needed data by calling the application’s API. This is a relatively robust and less time-consuming way. However, some applications do not have services developed in an intuitive manner, making them difficult to use. Secondly, the stability of these services can be an issue depending on the infrastructure or environment the application is running on. Deletion might be a bit trickier here.

If you have a legacy application and the services are not built very well, a ‘test data creation’ wrapper service can be created to facilitate that.
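A rough sketch of what creating and deleting data through an API could look like, using only Python’s standard library. Everything specific here is an assumption: the base URL, the `customers` resource and the REST-style create/delete endpoints are placeholders, so adjust them to whatever your AUT actually exposes.

```python
import json
import urllib.request

# Hypothetical base URL of the AUT's API; replace with your own environment.
BASE_URL = "https://aut.example.test/api"


def build_create_request(resource, payload):
    """Build a POST request that would create one record."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/{resource}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_delete_request(resource, record_id):
    """Build a DELETE request that would remove the record again."""
    return urllib.request.Request(
        f"{BASE_URL}/{resource}/{record_id}", method="DELETE"
    )


def send(request):
    """Send a request and return the HTTP status and raw response body."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status, response.read()


# Only the requests are built here; send() needs a reachable service.
create = build_create_request("customers", {"name": "Test Customer"})
delete = build_delete_request("customers", 42)
print(create.get_method(), create.full_url)
print(delete.get_method(), delete.full_url)
```

Keeping request building separate from sending makes the data layer easy to check without a running environment, which helps when the services themselves are unstable.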

Through UI

Create data by running the flow in the UI. Thankfully I’ve never had to do this in any project I worked on, but I have seen some folks doing it. Not recommended at all.

In the database

Another way is to create the needed data directly in the database. Writing SQL statements to create the data can be tricky: the business logic might keep changing, and you might end up creating data in a way that no longer matches what the current version of the application expects.

Deletion might be easier, but again you’d want to be sure you’re not disturbing the process flow of the application.
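To make the database route concrete, here is a minimal sketch using an in-memory SQLite database as a stand-in for the AUT’s database. The `customers` table and the `created_by` marker column are assumptions for the example; the marker is just one way to make sure cleanup only touches rows the automation inserted.

```python
import sqlite3

# In-memory SQLite stands in for the AUT database (assumption for the sketch).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, created_by TEXT)"
)


def insert_customer(conn, name):
    """Insert a test customer tagged as automation data and return its id."""
    cursor = conn.execute(
        "INSERT INTO customers (name, created_by) VALUES (?, ?)",
        (name, "automation"),
    )
    conn.commit()
    return cursor.lastrowid


def delete_automation_rows(conn):
    """Delete only the rows tagged as automation data; return how many."""
    cursor = conn.execute(
        "DELETE FROM customers WHERE created_by = ?", ("automation",)
    )
    conn.commit()
    return cursor.rowcount


customer_id = insert_customer(conn, "Test Customer")
print(customer_id)
```

In a real application a single business record often spans several tables, which is exactly where hand-written SQL starts drifting away from what the application itself would have created.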

Deletion of data

A lot of folks just don’t delete data and create new records every time. This might not have adverse effects in the short run, but you should have a purging strategy to remove all that data.

In some teams I’ve seen them restore to an old baseline state after a few months, which could be an option.

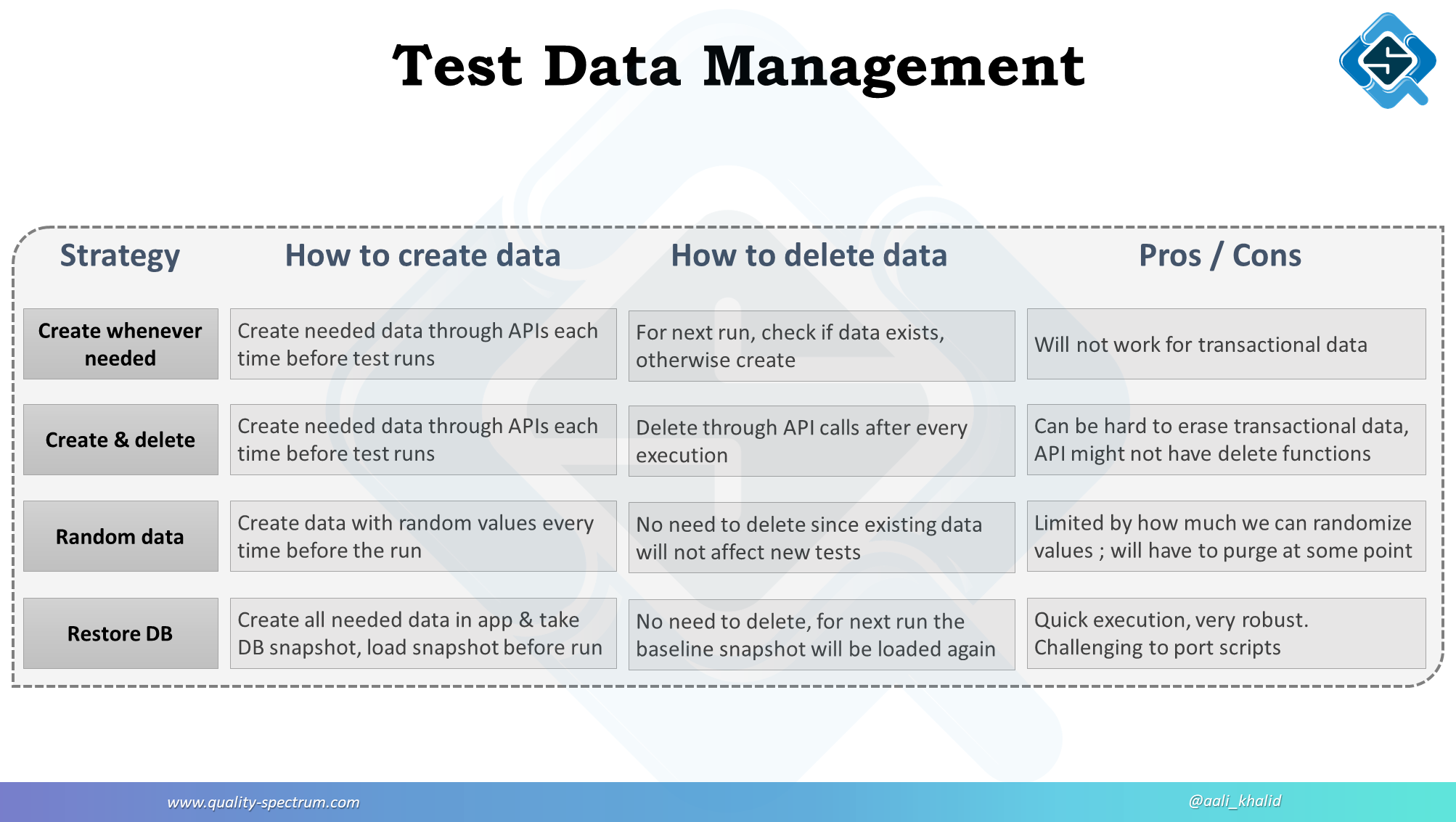

Design patterns

There are some common design patterns teams follow to manage their test data. A summary of the few discussed in the image:

Create & delete

The no-brainer method is to create a specific record each time you run the test. Creation can be done, again, through the front-end UI, web services or scripts adding rows to the database. The bright side here is that it’s easy to debug and update. If this can be done in a very reliable and efficient way, there is no harm in using it.

However, I’ve noticed that teams often underestimate the problems they’ll run into with this approach and eventually end up creating flaky tests. Make sure to have a robust creation mechanism and deletion strategy.

Create records needed

Slightly different from the one above: don’t attempt to delete data, just keep creating new records. Again, if that can work for your application, no harm. However, if you have a lot of transactional data in your application, this likely will not work. If you don’t delete records and keep creating new ones, eventually you might run out of room to create new records due to how data is processed in the AUT.

Even if you don’t have to delete, you’ll have to purge them at some point in time.

Random data

Some might not like the ‘delete at the end’ part. I don’t! Sometimes cleaning test data feels like getting that body fat off: every time we reach a great formula to solve the problem, it finds a new way to ‘stick around’.

The idea of random data is to create a new record every time we run the test. This way the record created last time does not affect our test. Since silver bullets do not exist, random data also has problems. Firstly, creating truly random data is hard. This is more of a math problem: most algorithms tend to start repeating a pattern, and the generated string is no longer as ‘random’. Secondly, if we keep adding body fat, eventually organs start to fail. Similarly, the test environment will overload, reaching a point where it becomes unmanageable. We might have to get liposuction at some stage then!
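For test data, what usually matters is that values do not collide between runs rather than that they are random in a strict mathematical sense. A small sketch of one way to get that, using UUIDs from Python’s standard library; the prefixes and the customer fields are made up for the example.

```python
import uuid


def unique_value(prefix):
    """Return a value that is very unlikely to repeat between test runs."""
    # uuid4 is generated from random bits; 12 hex characters keep it readable.
    return f"{prefix}-{uuid.uuid4().hex[:12]}"


def new_customer():
    """Build a hypothetical customer record with collision-resistant fields."""
    return {
        "name": unique_value("customer"),
        "email": f"{unique_value('user')}@example.test",
    }


customer = new_customer()
print(customer["name"])
```

A recognisable prefix also helps with the second problem: when the environment fills up, records created by automation are easy to find and purge.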

Dependent data

For x number of tests, you would need x test data creation methods. Some tests, however, can use data created by a previous test. For instance, in a shopping cart application, a test case verifying a cart payment needs products added to the cart first. Instead of adding that data as a prerequisite to this test, use the data created by the test that adds a product to the cart.

The main drawback here: if the previous test fails, the next test will also fail because of it, which we do not want.

Add to AUT

Some tests do not perform transactions on any record, which means the data remains in the same state before and after the test. Such tests can use data created in the application once, with no need to create or clean it again.

This method has no real drawbacks that I have come across (yes, sort of a silver bullet). However, you will not find many test scenarios where test data does not change during the test. Where it can work, this is the best strategy to use.

Restore DB

The last one, and my favorite: have a baseline version of the AUT. Create all the data needed for each script separately and take a snapshot of the database. Before each execution, restore the DB. This way you’ll not have to create data or remove it at the end.

The process is a bit lengthy, but if you have lots of transactional data, this will work like a charm.
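To show the snapshot-and-restore cycle in miniature, here is a sketch using SQLite’s backup facility from Python’s standard library. It is only a toy: the in-memory databases and the `orders` table are assumptions, and a real AUT would use its own database engine’s backup and restore tooling instead.

```python
import sqlite3

# In-memory SQLite stands in for the AUT database (assumption for the sketch).
aut_db = sqlite3.connect(":memory:")
aut_db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
aut_db.execute("INSERT INTO orders (status) VALUES ('open')")
aut_db.commit()

# Take the baseline snapshot once, after all needed data has been created.
baseline = sqlite3.connect(":memory:")
aut_db.backup(baseline)


def restore_baseline():
    """Discard pending changes and overwrite the AUT DB with the snapshot."""
    aut_db.rollback()
    baseline.backup(aut_db)


def order_statuses():
    """Return the status of every order, in id order."""
    return [row[0] for row in aut_db.execute("SELECT status FROM orders ORDER BY id")]


# A test run changes existing data and adds new records...
aut_db.execute("UPDATE orders SET status = 'paid'")
aut_db.execute("INSERT INTO orders (status) VALUES ('open')")
aut_db.commit()

# ...and the restore before the next execution brings the baseline back.
restore_baseline()
print(order_statuses())
```

Nothing in the tests themselves has to know how to create or delete data; the cost moves to keeping the baseline snapshot up to date as the application changes.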